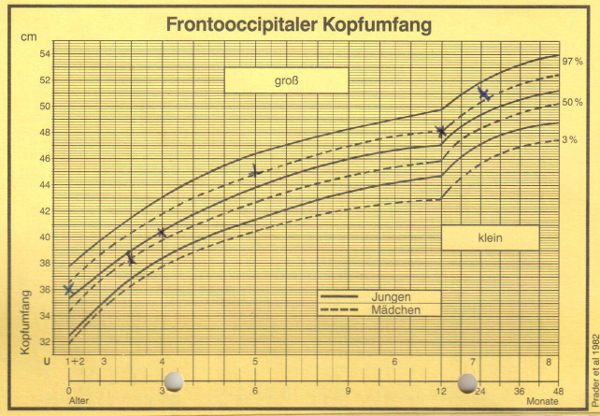

The diagram above shows the development of the frontooccipital circumference of a child’s head. We measure the growth of our children to track their healthy development. Only with the context of benchmarks does the data become meaningful. Without sharing, it is useless.

“Why Quantified Self Is The Next Big Thing,” Michael Reuter tells us in the previous post on this blog. And I agree: the economic drive, but even more the social incentives we earn from QS, will carry its evolution toward ubiquity.

With this in mind, we should take a step back and pause for a moment to think through some of the consequences a quantified society will have for our lives, and the alternative routes this development might take.

This post touches on topics from a conversation I had on Twitter with Whitney Erin Boesel and Anne Wright (see here).

Quantified Self or Quantified Other?

“Quantified Toilet” was a nice piece of design fiction: big data collection from analyzing feces in a public toilet. It would not have been a good hoax had it not been so close to reality. In public space as well as in privately owned para-public spaces like shopping malls, we are constantly quantified and monitored via a multitude of sensors. They record traffic patterns, footfall, cellphone usage, and noise level, but also telling environmental variables like micro-temperature and air moisture. Our phones also permanently track data not only about ourselves but also about other phones within reach. So we should be used to getting tracked. And there is hardly any difference between the CCTV surveillance we experience all the time, and that we seem to have mostly accepted, on the one side, and lifelogging, i.e. carrying a camera with us that takes pictures all the time, on the other. Nevertheless, there is very good reason to criticize both; either may be a gross violation of our right to informational self-determination.

However, we might think that tracking only our personal data for our own purposes is different from tracking others. So let’s think about social media: Twitter, Facebook, LinkedIn. They are not called “social” without cause. These platforms only work for us if we connect to others and communicate. Our timeline is not a monologue; we are interacting with others. If you try to opt out, all the connections you built while you were using the platform remain, even if your profile is deleted. Furthermore, your position in the social graph of others has an effect even if you never signed up for an account: a missing object can be reconstructed from the data collected around it. If we join a team of trackers with devices that support social sharing of data, our contribution might thus reveal things about others without their being aware. If we share our sleep-tracking with the Jawbone Up, our social graph will certainly allow some predictions about the behavior of the peers we added to our team. And if we send the cotton bud with the smear of bacteria living on and in our body to µBiome for analysis, there will be characteristic biological traces of other people we interacted with, by shaking hands, kissing …

“Surveillance Marketing”

There are two narratives about why QS data is valuable. The first is health applications. I am convinced that Michael is right. I have even written that the data, shared and collectively used for the public good, might save the planet. The second story is about marketing. I make a living from predicting behavior from data. It is called social research, market research, advertising planning, targeting. It is my profession. It is not evil to bring goods to the market. It is not evil that Facebook sells targeted ads. Nevertheless, people might start to wonder whether the deal the platforms offer them is fair: “Data is made of people!”

Many of the gadgets and platforms in QS are funded by VCs. Of course, most of them will have to be sold; otherwise the investors would not see their money back. Not even crowdfunding will necessarily save a start-up from that fate, as Oculus has demonstrated very prominently.

So I think we can expect people to demand control back, or even money. We should support an open source and open data QS culture, and build business models around it. These businesses will be ethically sound and will prove more robust. (The term “Surveillance Marketing” was coined by Bruce Sterling at Wired Nextfest in Milan, in just this context.)

Algorithm Ethics

At last year’s QS conference, Gary Wolf described his experiment of taking different gadgets that track walking and running and comparing their results. As expected, there were huge differences. A similar observation with commercial gene-sequencing services led to an investigation by the FDA. We can’t build technology without bringing value judgments into it. I have written and talked about that extensively. It is not enough just to know this fact. We should always ask: could this technology be done in a different way? And we should demand that the algorithms be laid open. There should not be a black box when our most intimate data is concerned. In IT security, it is widely accepted that open cryptographic algorithms are the only ones that can be trusted. Open QS algorithms should be thought of the same way.

Liberal Fallacy or Empowerment?

My fourth point is about self-determination and responsibility. QS is a great way to support people’s health. We have heard fantastic stories of how tracking your body, your mind, and your mood can help people regain the autonomy to lead a self-determined, active life. We should not corrupt the great contribution QS can make by turning it against people.

What if someone cannot change her life even if she tries? The most common form of this argument, which I tend to call the liberal fallacy, goes like this: “Obese people should own scales, track their weight, food intake, and training, and then they will overcome their condition. If they don’t succeed, they are just lazy.” I have heard obese-blaming frequently, and although other forms of ableism are less accepted, it is still common to place responsibility for failure on the one who failed.

Today, parents have to defend themselves for declining prenatal diagnostics. I have heard people blaming parents for giving birth to a child with Down syndrome. We must not allow people to force a decision such as having an abortion on others just because they value human lives only according to fitness and health.

We should take care to help people with QS without patronizing them. We should not imply that everybody is master of their own life. We should not hand our responsibility to care for others off to technology, leaving people alone with their problems because we think they can now achieve through technology what they had failed at before. Our responsibility to respect others, to not discriminate against anybody, to not segregate ourselves from anyone, and to accept others’ personalities and weaknesses will not change with QS; it might rather become more difficult.

There is no “virtual reality” we could just unplug from. Our being embedded in data is the only reality there is. There will be no opt-out. So we should help people to understand their quantified lives. We should lead the discussion about which ethics we want to govern our lives.

If we act responsibly with our technology and our practices, if we stay alert to the intentions behind them, if we keep the discussion open, fostering transparency, and if we sympathize with others rather than judging them, the Quantified Self will unfold into more than just “the next big thing”.

[Note: I had written a draft of this post after the Twitter conversation here; when I opened the draft today, all was gone, except one number: “42”. … it is not about the answers, it is about the questions …]