In the first part of this Cosmic-X blog post series, we evaluated various blockchain platforms for their suitability in Industry 4.0 and explained why we chose the Secret Network with its confidential computing capabilities. Today, we’ll explore how we use the Secret Network to secure machine data integrity from its origin to its consumption.

Need for Data Integrity

Securing data integrity in Industry 4.0 is crucial because systems and devices rely on accurate data to function effectively. Tampered or incorrect data can lead to poor decisions, operational failures, and vulnerabilities in key sectors like manufacturing and logistics. With IoT and AI driving Industry 4.0, maintaining data accuracy keeps operations reliable, protects sensitive information, and helps defend against cyber threats that disrupt businesses and critical infrastructure.

Anchoring data close to its source is essential for securing integrity across the entire data processing chain, which often involves multiple distributed systems. For machines, this means securing the data before it leaves the device. At the same time, the system must protect the anchored data from tampering after export. Blockchain’s immutable nature aligns perfectly with this paradigm. That’s why we built a Wallet Service on top of the Secret Network. This service integrates seamlessly into any machine to secure its data integrity in a decentralized and privacy-preserving manner.

Wallet Service

The Wallet Service acts as a gateway for communication with the Secret Network. It deploys onto any machine infrastructure that supports Docker. By using the Wallet Service, machines interact directly with the blockchain and its smart contracts. The service assigns each machine a unique identity through a public-private key pair. With its private key, the machine signs and broadcasts transactions to anchor its data on the Secret Network. The blockchain’s encryption ensures that no unauthorized third party can access the data. For details on how the network reaches consensus despite encryption, refer to our previous post.

Integration

To simplify integration, the Wallet Service offers a straightforward REST API with two endpoints. The ingress endpoint accepts a batch of data in a defined structure for anchoring. After receiving the data, the Wallet Service hashes it and stores the resulting hash in the service’s smart contract through a transaction on the Secret Network. This process creates an immutable fingerprint, allowing anyone to verify the integrity of a data batch through the Wallet Service’s verification endpoint. Since data verification typically occurs in systems other than the one that supplied the data, the Wallet Service supports deployment anywhere. In distributed data processing scenarios like Cosmic-X, entities that consume data instantiate a Wallet Service to verify data integrity before making decisions. For example, an AI service provider might deploy a Wallet Service in its cloud environment to verify data before using it for training or inference.
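The anchor-then-verify flow can be sketched in a few lines. This is a minimal illustration and not the Wallet Service’s actual code: the function names `anchor` and `verify` are hypothetical, an in-memory dictionary stands in for the smart contract on the Secret Network, and SHA-256 is an assumed hash function, since this post does not name one.

```python
import hashlib

# Hypothetical stand-in for the smart contract's storage: batchId -> hash.
# In the real system this state lives on the Secret Network.
anchored_hashes: dict[str, str] = {}


def fingerprint(payload_bytes: bytes) -> str:
    """Return the hex digest of the serialized payload (SHA-256 is an assumption)."""
    return hashlib.sha256(payload_bytes).hexdigest()


def anchor(batch_id: str, payload_bytes: bytes) -> str:
    """Ingress side: hash the payload and store the hash under its batchId."""
    digest = fingerprint(payload_bytes)
    anchored_hashes[batch_id] = digest
    return digest


def verify(batch_id: str, payload_bytes: bytes) -> bool:
    """Verification side: recompute the hash and compare it with the anchored one."""
    expected = anchored_hashes.get(batch_id)
    return expected is not None and expected == fingerprint(payload_bytes)


if __name__ == "__main__":
    original = b'{"temperature_1715850083":{"value":41.7}}'
    tampered = b'{"temperature_1715850083":{"value":99.9}}'
    anchor("2080839_1715853600", original)
    print(verify("2080839_1715853600", original))  # unchanged data
    print(verify("2080839_1715853600", tampered))  # modified data
```

Because only the hash is stored, the verifier needs the original batch itself from the regular data pipeline; the anchored fingerprint merely confirms that the batch was not altered along the way.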

Requirements

Two conditions must be met for this workflow to function. First, the verifying Wallet Service must have the appropriate viewing key from the machine that supplied the data. Otherwise, it cannot decrypt and query the fingerprints stored in the smart contract. Second, the format and schema of the data batch must remain standardized across the processing chain. To achieve this, we developed a Data Integrity Protocol as the foundation of the Wallet Service.

Data Integrity Protocol

To anchor and verify data batches reliably, the Wallet Service requires a standardized protocol. Both the data anchoring and verification processes must adhere to a common data format, schema, and canonicalization standard. For Cosmic-X, we chose JSON as the data format and RFC 8785 as the canonicalization algorithm. Canonicalization makes cryptographic operations on JSON data reproducible by fixing how whitespace, the serialization of data types, and the ordering of object properties are handled, so that logically identical data always produces the same byte sequence and therefore the same hash.
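To see why canonicalization matters, consider two JSON documents that carry the same data but differ in key order and whitespace. The sketch below only approximates RFC 8785 with Python’s standard library: sorting keys and removing whitespace is enough for this simple example, but a complete implementation must also follow the RFC’s rules for number serialization and for sorting property names by UTF-16 code units. The function names and the use of SHA-256 are assumptions for illustration.

```python
import hashlib
import json


def canonicalize(document: str) -> bytes:
    """Approximate canonical form: sorted keys, no insignificant whitespace, UTF-8.

    Note: this is NOT a complete RFC 8785 implementation.
    """
    parsed = json.loads(document)
    canonical = json.dumps(
        parsed, sort_keys=True, separators=(",", ":"), ensure_ascii=False
    )
    return canonical.encode("utf-8")


def fingerprint(document: str) -> str:
    """Hash the canonical form (SHA-256 is an assumption)."""
    return hashlib.sha256(canonicalize(document)).hexdigest()


# Same data, different key order and whitespace.
doc_a = '{"value": 21.5, "name": "temperature"}'
doc_b = '{\n  "name": "temperature",\n  "value": 21.5\n}'

if __name__ == "__main__":
    # Hashing the raw strings would give two different digests.
    raw_a = hashlib.sha256(doc_a.encode("utf-8")).hexdigest()
    raw_b = hashlib.sha256(doc_b.encode("utf-8")).hexdigest()
    print(raw_a == raw_b)
    # Hashing the canonical forms gives the same digest.
    print(fingerprint(doc_a) == fingerprint(doc_b))
```

Without this step, a system that merely re-serializes a batch with different formatting would fail verification even though no value changed.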

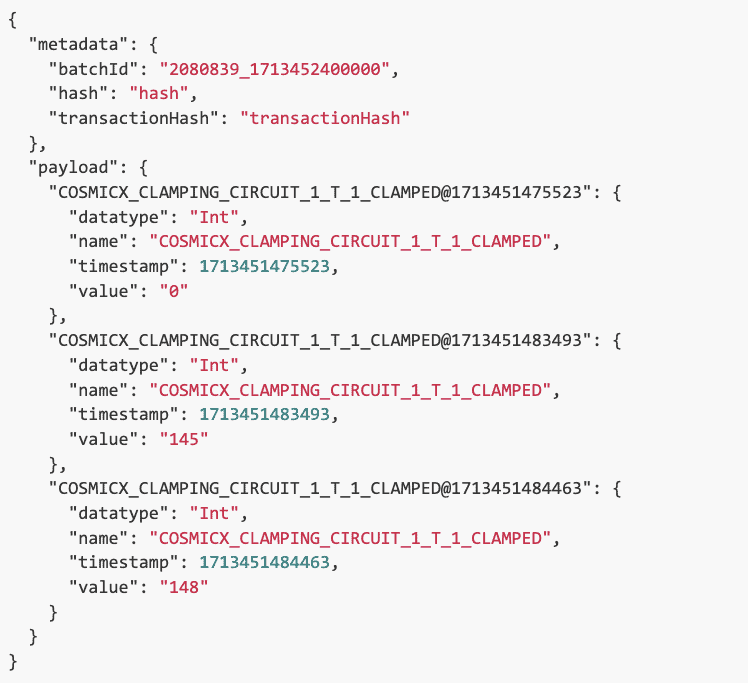

Batch Structure

Considering use case requirements and the limitations of edge and cloud environments in Cosmic-X, we define a data batch as one hour’s worth of sensor data collected from a machine. The figure below shows an extract of a data batch collected from one of the use cases. The batch includes a metadata object used only for the Wallet Service’s business logic. This metadata contains key-value pairs such as the batchId and placeholders for the payload hash and the transaction hash on the Secret Network blockchain. The payload, which the system hashes during anchoring, consists of discrete sensor measurements. Each measurement uses a composite key created by concatenating the variable name with the Unix timestamp of its recording. The measurements include key-value pairs for variable name, timestamp, absolute value, and data type.
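As a rough illustration of this layout, the following sketch assembles a batch with a metadata object and a payload of keyed measurements. Apart from batchId, all field names (`payloadHash`, `txHash`, `variableName`, `timestamp`, `value`, `dataType`), the underscore separator in the composite key, and the sample variable `spindle_temperature` are assumptions made for this example; the actual schema may differ.

```python
from typing import Any


def measurement_key(variable: str, unix_ts: int) -> str:
    """Composite key: variable name joined with the Unix timestamp.

    The underscore separator is an assumption.
    """
    return f"{variable}_{unix_ts}"


def build_batch(
    batch_id: str, measurements: list[tuple[str, int, Any, str]]
) -> dict[str, Any]:
    """Assemble a batch from (variable, unix_ts, value, data_type) tuples."""
    payload: dict[str, Any] = {}
    for variable, unix_ts, value, data_type in measurements:
        payload[measurement_key(variable, unix_ts)] = {
            "variableName": variable,
            "timestamp": unix_ts,
            "value": value,
            "dataType": data_type,
        }
    return {
        "metadata": {
            "batchId": batch_id,
            "payloadHash": None,  # placeholder, filled in during anchoring
            "txHash": None,       # placeholder, filled in after the transaction
        },
        "payload": payload,       # only this part is hashed
    }


if __name__ == "__main__":
    batch = build_batch(
        "2080839_1715853600",
        [("spindle_temperature", 1715850083, 41.7, "float")],
    )
    print(sorted(batch["payload"]))
```

Keeping the placeholders in the metadata rather than in the payload means that filling them in after anchoring does not change the hashed content.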

The batchId is the most critical part of a data batch. Since the Wallet Service uses it to anchor and later locate the data batch for verification, the batchId must be unique. In this setup, the batchId combines a machine ID with a Unix timestamp representing the time range of measurements in the batch, rounded to the nearest hour. For example, if machine 2080839 collects measurements from 11:01:23 to 11:59:43 on May 16, 2024, the batchId becomes 2080839_1715853600. The timestamp 1715853600 is 10:00:00 UTC on that date, which is 12:00:00 in Central European Summer Time.
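The batchId construction can be sketched as follows. Two assumptions are needed to reproduce the example value: the times are read as Central European Summer Time (UTC+2), and the rounding is applied to the end of the measurement range. The function name `make_batch_id` is hypothetical.

```python
from datetime import datetime, timedelta, timezone

SECONDS_PER_HOUR = 3600


def make_batch_id(machine_id: str, range_end: datetime) -> str:
    """Join the machine ID with the range end, rounded to the nearest full hour."""
    if range_end.tzinfo is None:
        raise ValueError("range_end must be timezone-aware")
    ts = int(range_end.timestamp())
    # Adding half an hour before flooring rounds to the nearest hour.
    rounded = (ts + SECONDS_PER_HOUR // 2) // SECONDS_PER_HOUR * SECONDS_PER_HOUR
    return f"{machine_id}_{rounded}"


# Assumption: the example times are in Central European Summer Time (UTC+2).
CEST = timezone(timedelta(hours=2))

if __name__ == "__main__":
    last_measurement = datetime(2024, 5, 16, 11, 59, 43, tzinfo=CEST)
    print(make_batch_id("2080839", last_measurement))  # 2080839_1715853600
```

Requiring a timezone-aware datetime avoids a subtle source of mismatches: if the anchoring and verifying systems run in different time zones, naive local times would produce different batchIds for the same batch.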

In the next post, we’ll showcase how we integrated the Wallet Service with three live machines and an AI service to enable secure and accurate anomaly detection in machine components.

Curious how our blockchain-based data-integrity solution can help your business? Check out our one-pager for a quick overview of its key benefits!